protalos - diving into kubernetes with talos, proxmox and terraform

I recently got my hands on a small used machine, which made me want to redo part of my homelab once again. For quite some time I have wanted to improve my skills in administrating and using Kubernetes, and I had read lots of good things about Talos. For virtualization in my homelab I almost always choose Proxmox. I have been using Terraform / OpenTofu in production for a few years, so why not in my homelab as well, since it is also meant to hone my skills for real-world deployments.

Proxmox and terraform

Looking at the options for terraform providers there are currently two big ones:

Telmate/proxmox and bpg/proxmox.

After some experiments I decided to use bpg/proxmox, since I had a few issues using Telmate/proxmox with my Proxmox instance (version 8.2.2). Neither seems to be really feature complete, but it also seems that the Proxmox backend does not support some operations without SSH and user + password, which is pretty bad. If there were a wishlist for Proxmox 9, I'd like to put a feature-complete Terraform provider integration on it, or an API which makes a community-developed one possible.
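To make the provider choice a bit more concrete, here is a minimal sketch of how the bpg/proxmox provider could be wired up. The endpoint URL, the variable name and the SSH user are placeholders I made up for illustration, not values from my setup. The `ssh` block is there because some operations go over SSH instead of the API, as mentioned above.

```hcl
terraform {
  required_providers {
    proxmox = {
      source = "bpg/proxmox"
    }
  }
}

# Hypothetical variable holding an API token in the form "user@realm!tokenid=secret".
variable "proxmox_api_token" {
  type      = string
  sensitive = true
}

provider "proxmox" {
  endpoint  = "https://pve.example.lan:8006/" # placeholder host
  api_token = var.proxmox_api_token
  insecure  = true # only sensible with a self-signed certificate in a homelab

  # Used for the operations the Proxmox API does not cover.
  ssh {
    agent    = true
    username = "root" # assumption
  }
}
```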

Talos and the image factory

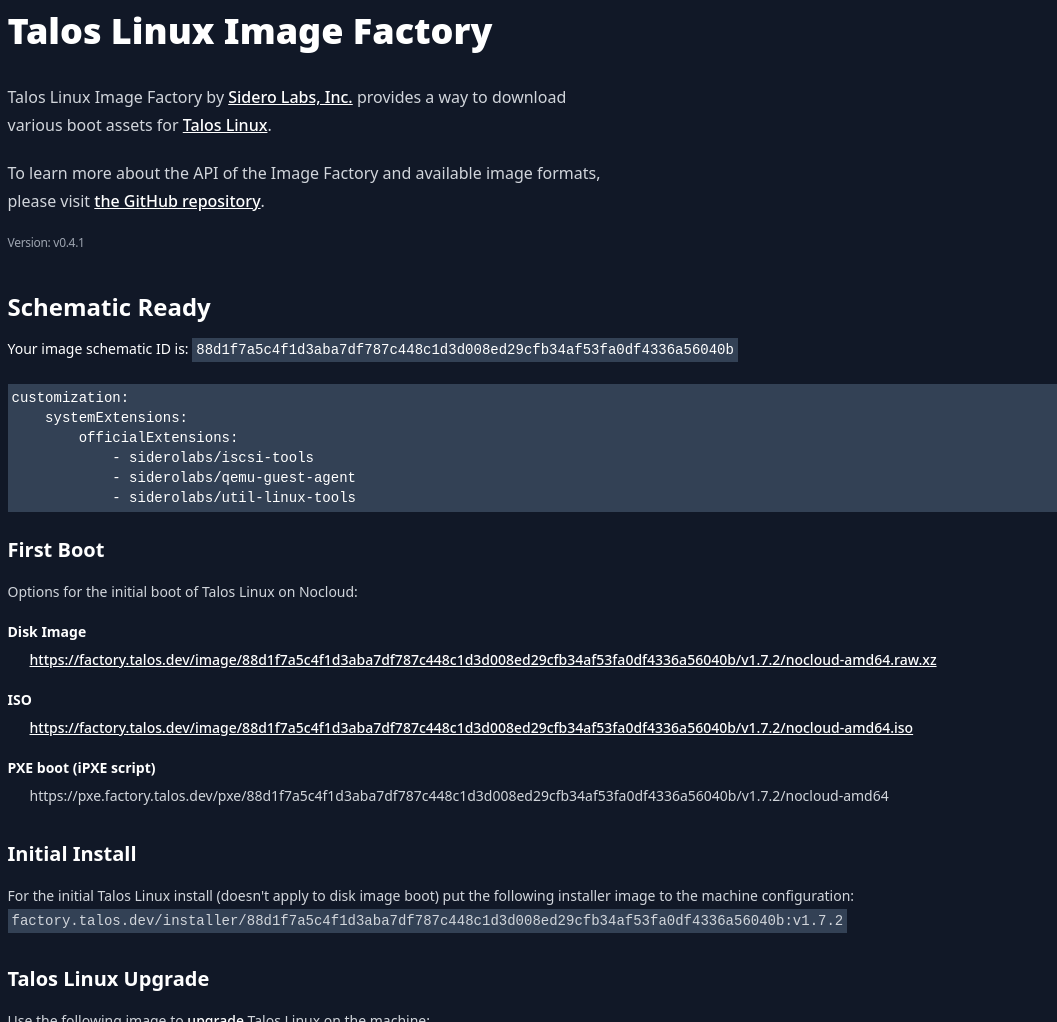

Now for the image: I needed some enhancements which are not included in the default ISO or raw image. Fortunately, the Talos team provides the Image Factory service, which saved me some headaches; otherwise I would have had to use e.g. Packer to extend the image or create my own. To create our image we will follow the creation wizard using these options:

- Hardware Type: Cloud Server

- Version: 1.7.2

- Cloud: nocloud

- Machine Architecture: amd64

- System Extensions:

  - siderolabs/qemu-guest-agent (for retrieving the IP when using DHCP)

  - siderolabs/iscsi-tools (needed for Longhorn)

  - siderolabs/util-linux-tools (needed for Longhorn)

- Customization: (empty)
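The same choices can also be expressed without the wizard. The following is only a sketch under a few assumptions: it relies on the Image Factory HTTP API accepting a schematic via POST and answering with a JSON body containing an `id`, on a recent hashicorp/http provider that supports `method` and `request_body`, and on an already configured bpg/proxmox provider. The node name `pve` and the datastore `local` are placeholders.

```hcl
locals {
  talos_version = "v1.7.2"

  # Schematic mirroring the wizard choices: three official extensions, no further customization.
  schematic = yamlencode({
    customization = {
      systemExtensions = {
        officialExtensions = [
          "siderolabs/iscsi-tools",
          "siderolabs/qemu-guest-agent",
          "siderolabs/util-linux-tools",
        ]
      }
    }
  })
}

# Register the schematic with the factory and read back its ID.
data "http" "schematic" {
  url          = "https://factory.talos.dev/schematics"
  method       = "POST"
  request_body = local.schematic
}

locals {
  schematic_id = jsondecode(data.http.schematic.response_body).id
  image_url    = "https://factory.talos.dev/image/${local.schematic_id}/${local.talos_version}/nocloud-amd64.iso"
}

# Let Proxmox download the ISO into a datastore (names are assumptions).
resource "proxmox_virtual_environment_download_file" "talos" {
  content_type = "iso"
  datastore_id = "local"
  node_name    = "pve"
  url          = local.image_url
  file_name    = "talos-${local.talos_version}-nocloud-amd64.iso"
}
```

If you prefer the wizard, the schematic ID it shows can be put into a variable instead of the `http` data source.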

The result of the wizard should look something like the image above.

Another great thing about Talos is its Terraform provider, which makes it possible to go from no cluster, to bootstrapped, to provisioned services (using the Kubernetes and Helm providers) without any manual intervention. For users of Flux and ArgoCD this also means that it is possible to restore a cluster and its infrastructure in one go, which is pretty awesome.
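Roughly, the "no cluster, to bootstrapped, to provisioned" chain could look like the sketch below. It assumes the siderolabs/talos provider is declared in `required_providers`, uses a single control plane node, and the cluster name and IP address are made up. Depending on the provider version, the kubeconfig may be exposed as a resource rather than a data source, so check the provider docs for the version you use.

```hcl
# Generates the cluster secrets (CA, tokens, client certificates).
resource "talos_machine_secrets" "this" {}

# Renders the machine configuration for a control plane node.
data "talos_machine_configuration" "controlplane" {
  cluster_name     = "homelab"                # placeholder
  cluster_endpoint = "https://10.0.0.10:6443" # placeholder
  machine_type     = "controlplane"
  machine_secrets  = talos_machine_secrets.this.machine_secrets
}

# Pushes the configuration to the freshly booted node.
resource "talos_machine_configuration_apply" "controlplane" {
  client_configuration        = talos_machine_secrets.this.client_configuration
  machine_configuration_input = data.talos_machine_configuration.controlplane.machine_configuration
  node                        = "10.0.0.10"
}

# Bootstraps etcd once the configuration has been applied.
resource "talos_machine_bootstrap" "this" {
  depends_on           = [talos_machine_configuration_apply.controlplane]
  client_configuration = talos_machine_secrets.this.client_configuration
  node                 = "10.0.0.10"
}

# Fetches credentials for the new cluster.
data "talos_cluster_kubeconfig" "this" {
  depends_on           = [talos_machine_bootstrap.this]
  client_configuration = talos_machine_secrets.this.client_configuration
  node                 = "10.0.0.10"
}

# Feeds the credentials into the Kubernetes provider so services can follow in the same run.
provider "kubernetes" {
  host                   = data.talos_cluster_kubeconfig.this.kubernetes_client_configuration.host
  client_certificate     = base64decode(data.talos_cluster_kubeconfig.this.kubernetes_client_configuration.client_certificate)
  client_key             = base64decode(data.talos_cluster_kubeconfig.this.kubernetes_client_configuration.client_key)
  cluster_ca_certificate = base64decode(data.talos_cluster_kubeconfig.this.kubernetes_client_configuration.ca_certificate)
}
```

The Helm provider can be configured from the same `kubernetes_client_configuration` attributes.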

storage, networking and services rundown

For storage I used Longhorn, which means I needed the iSCSI and util-linux tools extensions (see the documentation) I picked in the previous section. For networking I used Cilium, since I had heard a lot of good things about it and it provides a load balancer implementation I can use (https://docs.cilium.io/en/stable/network/lb-ipam/). I disabled Hubble in the Cilium helm chart configuration and chose NeuVector for observability. For CD I chose ArgoCD, and I added Harbor as a registry, mostly to be used as a pull-through cache. Lastly, to expose the dashboards I used ingress-nginx, mostly to try it out, since I had almost exclusively used Traefik before. Since Traefik normally has ACME built in and ingress-nginx does not, I also added cert-manager. Finally, I also found kubernetes-sigs/external-dns, which is really awesome so far. It manages your DNS entries in almost all big DNS providers and even in Pi-hole, which was perfect for my homelab.
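As an illustration of the Cilium part, a helm release with Hubble switched off could be sketched like this. It assumes a configured Helm provider. The release name and namespace are my choice for the example, and the additional Talos-specific chart values (see the Talos documentation on deploying Cilium) are deliberately left out to keep it short.

```hcl
resource "helm_release" "cilium" {
  name       = "cilium"
  namespace  = "kube-system"
  repository = "https://helm.cilium.io/"
  chart      = "cilium"

  # Only the setting discussed above; a real deployment on Talos needs more values.
  values = [yamlencode({
    hubble = {
      enabled = false
    }
  })]
}
```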

problems and solutions

During development there was a period when the Image Factory didn't work, which blocked terraform plan, since Proxmox couldn't read the file name anymore. Fortunately the Image Factory is open source as well, so I hacked together a small docker-compose.yml to build the ISO locally.

The Longhorn service blocks destruction of the cluster in multiple ways. This isn't really a problem, since manually deleting the namespace or rerunning the uninstaller is not too annoying.

The Terraform Kubernetes provider does not support manifests for custom resources without the cluster being up during planning. This is a big problem, since Cilium needs the IP pool configured and cert-manager needs an issuer. Sure, you can configure the cert-manager issuer and the IP pool later using Flux/ArgoCD (this would mean moving ingress-nginx as well, otherwise its helm install won't complete, since no IP can be assigned), but having a single run for creation and deletion would be great. The solution is using the kubectl provider. Make sure you pick the alekc/kubectl one, since the other one doesn't seem to be maintained and you will run into all kinds of problems using it.
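A sketch of what this can look like with the alekc/kubectl provider, assuming the provider is configured against the new cluster. The pool name, the CIDR, the issuer name, the email address and the HTTP-01 solver are made-up examples, not my actual configuration. In a real setup each manifest would also need a `depends_on` pointing at the helm release that installs the matching CRDs.

```hcl
terraform {
  required_providers {
    kubectl = {
      source = "alekc/kubectl"
    }
  }
}

# IP pool for Cilium LB IPAM. Newer Cilium releases use "blocks";
# older ones used "cidrs" for the same purpose.
resource "kubectl_manifest" "lb_pool" {
  yaml_body = <<-YAML
    apiVersion: cilium.io/v2alpha1
    kind: CiliumLoadBalancerIPPool
    metadata:
      name: homelab-pool
    spec:
      blocks:
        - cidr: 192.168.1.240/28
  YAML
}

# ACME issuer for cert-manager, pointing at the Let's Encrypt staging endpoint.
resource "kubectl_manifest" "cluster_issuer" {
  yaml_body = <<-YAML
    apiVersion: cert-manager.io/v1
    kind: ClusterIssuer
    metadata:
      name: letsencrypt-staging
    spec:
      acme:
        server: https://acme-staging-v02.api.letsencrypt.org/directory
        email: admin@example.com
        privateKeySecretRef:
          name: letsencrypt-staging-account-key
        solvers:
          - http01:
              ingress:
                ingressClassName: nginx
  YAML
}
```

Because `kubectl_manifest` takes the manifest as plain YAML, it can be planned before the cluster exists, which is what makes the single run possible.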

outro and backups

So far the cluster has been stable and it was pretty enjoyable to set up. At the start I allocated too few resources, which made me pull my hair out for some time, since some of the error messages weren't really obvious, or the nodes just didn't become ready or didn't manage to start the kubelet. Otherwise it has been a great experience. I also added Velero to push backups of my control plane data to my MinIO instance. For PVC backups I use Longhorn directly.

resources which helped a lot

- Talos on Proxmox by the talos team

- Longhorn Talos support by the Longhorn team

- cilium IPAM by the cilium team

- cert-manager acme documentation by the cert-manager team

- ArtifactHub for helm options and skimming:

  - Longhorn

  - cilium

  - cert-manager

  - ArgoCD

  - external-dns

  - ingress-nginx

  - neuvector

  - harbor (using the bitnami one, since there is also an arm64 image in case I want to move to arm in the future)